Objectives

Why Do We Need Objectives?

Taking the time to formulate objectives may seem daunting or like yet another time-consuming task, but objectives help both the educator and students. Objectives help educators organize content to clarify the goals of instruction, create assessments, select materials, and communicate to students what they need to know and do (2,3). For students, objectives clarify expectations, help organize and prioritize study materials, and prime them for learning by focusing their attention (2,3). When used properly, learning objectives can be an invaluable tool to help students know what to focus on when preparing for a class. They can also be used to review key concepts prior to an exam, presuming there is proper alignment between the objectives and the questions in the assessment.

Evidence from cognitive science shows that exposure to relevant words or images helps prime or prepare learners to attend to pertinent information. The use of unfamiliar items in priming is associated with an increase in neural activity; specifically, studies have shown that the use of repeated novel stimuli in priming increases visual cortical activity which may reflect attentional allocation (4). In other words, when students are presented with an unfamiliar stimulus repeatedly, their attention is drawn more acutely. Similar activity is seen in the visual cortex during exercises in selective attention in which observers are asked to find images that match a feature (shape, color, or speed). Being cued to these features increased brain activity over divided attention exercises where observers were asked to look for any changes regardless of features (5). Cues given before instruction, such as pre-tests and learning objectives, can help draw the learner’s attention to relevant information that will be given during instruction (6).

How to Write Objectives

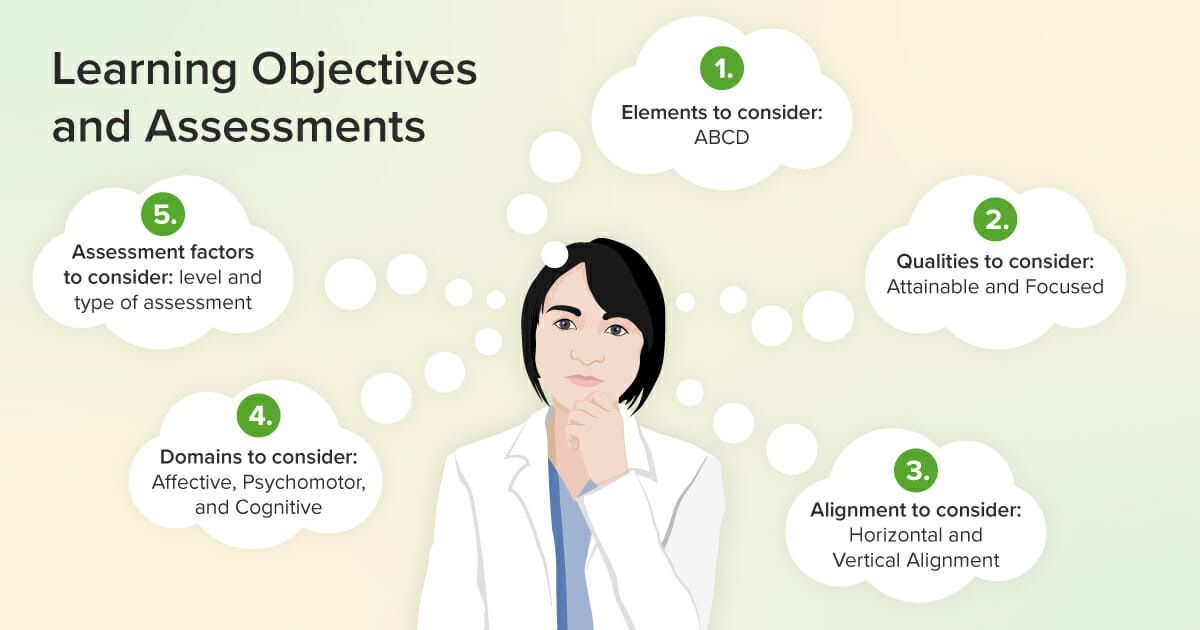

One method for writing a well-defined objective uses ABCD: Audience, Behavior, Condition, Degree (2,7). The audience is the students or learners, while the behavior is the specific, measurable behavior that uses an action verb. The condition describes any resources, interactions, or materials that may be used, and the degree gives the expected level of mastery. The objective is written from the perspective of the learner and describes what the learner will be able to do after instruction.

ABCD Model for writing learning objectives

For example, the audience says who will perform the desired behavior and under what conditions it will occur. Objectives may begin with:

“After the simulation, students will…” or

“After the workshop, participants will…”

The audience statement should be followed by a behavior that incorporates a measurable verb. Some common verbs that should be avoided are know, understand, and learn because they cannot be directly observed. Instead, decide how the student will demonstrate that they know or understand: they might define, apply, or discuss the concept being studied. For example,

“After the simulation, students will communicate empathetically” or

“After the workshop, participants will create a lesson”

Be sure the behavior is the outcome, not a task list of learning experiences such as “Students will read four articles.” This statement doesn’t say what the outcome should be, only the learning experience used. Instead, this statement could be adjusted: “Students will use the results of four articles to justify a treatment plan for a pediatric cancer patient.”
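The verb guidance above can be turned into a quick screening step when drafting objectives. The following is a minimal sketch, not a validated tool: the function name `find_vague_verbs` is hypothetical, and the verb list contains only the three verbs named in the text. It matches exact word forms only, so variants such as "knows" would need to be added by hand.

```python
import re

# Verbs the text names as not directly observable.
VAGUE_VERBS = {"know", "understand", "learn"}


def find_vague_verbs(objective: str) -> list[str]:
    """Return the vague verbs found in an objective, in order of appearance."""
    words = re.findall(r"[a-z]+", objective.lower())
    return [word for word in words if word in VAGUE_VERBS]


# An objective built on an unobservable verb is flagged for revision.
print(find_vague_verbs("After the workshop, participants will understand lesson design"))
# An objective built on a measurable verb passes the screen.
print(find_vague_verbs("After the workshop, participants will create a lesson"))
```

A flagged objective is a prompt to ask how the learner will demonstrate the knowledge, not proof that the objective is poorly written.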

The condition includes any resources, interactions, or tools the learner may have available. For example,

“After the simulation, students will communicate with a terminally ill patient” or

“After the workshop, participants will create lessons using available videos”

However, these objectives are still vague. The degree gives the expected level of mastery such as accuracy, the use of professional standards, or the expected quantity. For example,

“After the simulation, students will communicate with a terminally ill patient using the SPIKES framework” or “After the workshop, participants will create two flipped classroom lessons using available videos.”

Example of the ABCD model used with a learning objective
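For educators who keep objectives in a spreadsheet or script, the four ABCD parts can be stored separately and assembled into a statement. The sketch below is one possible arrangement under stated assumptions: the `Objective` class and its `text` and `missing` methods are hypothetical names, and the example wording is taken from the objectives above.

```python
from dataclasses import dataclass


@dataclass
class Objective:
    audience: str        # who will perform the behavior, e.g. "After the simulation, students"
    behavior: str        # measurable action verb phrase, e.g. "communicate"
    condition: str = ""  # resources, interactions, or tools available
    degree: str = ""     # expected level of mastery

    def text(self) -> str:
        """Join the non-empty parts into a single objective statement."""
        parts = [f"{self.audience} will {self.behavior}", self.condition, self.degree]
        return " ".join(part for part in parts if part)

    def missing(self) -> list[str]:
        """Name the ABCD parts that are still blank."""
        return [name for name, value in vars(self).items() if not value.strip()]


full = Objective(
    audience="After the simulation, students",
    behavior="communicate",
    condition="with a terminally ill patient",
    degree="using the SPIKES framework",
)
print(full.text())

# A draft with no degree is still vague; missing() points to the gap.
draft = Objective(
    audience="After the workshop, participants",
    behavior="create lessons",
    condition="using available videos",
)
print(draft.missing())
```

Keeping the parts separate makes it easy to see which element a vague objective lacks before it reaches students.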

Another approach to formulating objectives is the SMART framework: Specific, Measurable, Attainable, Relevant, and Time-Bound (1). Similar to the method above, specific and measurable describe the observed behavior. Attainable means the objective can be attained in the time given with resources available. Relevant requires that the objective aligns with instruction and assessments. Time-bound involves giving the objective a time frame for when it will be met. Combining SMART and ABCD helps formulate effective objectives that are clearly defined for learners.

Creating Effective Objectives

Writing a clear objective is just one step in creating effective objectives. Care should also be taken to ensure objectives are attainable and aligned with assessments and content. If there are too few objectives, meaning they are incomplete or inaccurate when compared to the content and/or assessments, the assessment may cover material not addressed in the objectives, which could cause students to become frustrated with the assessment and distrustful of the instructor (2). When expectations are unclear, the assessments may seem unfair or the instructor may seem unreasonable or overly tough. Clearly defined objectives accurately convey expectations to students.

If there are too many objectives, students may become overwhelmed. If there are hundreds of objectives, students have no way to discern what is nice-to-know from need-to-know. In these cases, students may not be able to attain all of the objectives and may cull material haphazardly.

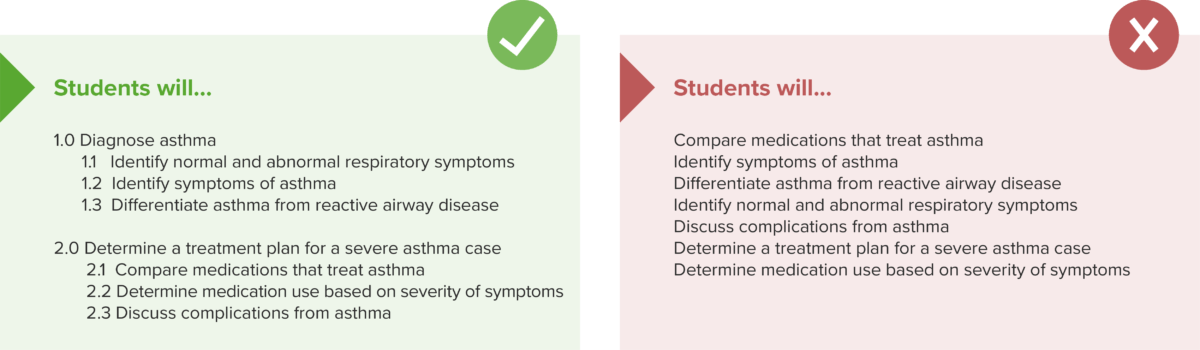

Crucially, objectives need to be attainable in the time allotted. Within that, objectives need to be focused. A student may learn 80 of 100 objectives given, but if they haven’t learned the most important 10 in that list, they may fail to achieve what the instructor expects as representative of the course or profession. In this case, it may be desirable to organize the objectives into terminal and enabling objectives. Terminal objectives are broader performance goals (3). These are the “bigger picture” items you want students to do at the end of the instructional period. They are the skills, knowledge, and dispositions that define the need for instruction. Enabling objectives describe the skills that make up those bigger goals. These are the skills, knowledge, and dispositions that not all students have at the start of instruction but are necessary to successfully fulfill the terminal objectives.

Terminal and enabling objectives can help clarify instructional goals

The figure above contains the same objectives on both sides. The format on the right lists objectives randomly. Students have no way to know what, if anything, is more important or how the objectives relate. The format on the left groups the same objectives into terminal (1.0 and 2.0) and enabling objectives (1.1-1.3, 2.1-2.3). Even for this short list, a hierarchy clarifies for learners the expectations and what they need to accomplish to attain them. Students are more likely to attend to a short list (three to five goals) than a lengthy list of objectives (3).
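The grouping described above can be sketched in a few lines for instructors who manage objectives as a flat list. This is an illustrative sketch only: the function name `group_objectives` is hypothetical, the objective texts are placeholders, and it assumes the numbering convention described for the figure, where "1.0" marks a terminal objective and "1.1", "1.2", and so on mark its enabling objectives.

```python
def group_objectives(numbered):
    """Group (number, text) pairs under their terminal objective.

    Assumes "N.0" is a terminal objective and "N.1", "N.2", ... are the
    enabling objectives that support it.
    """
    def sort_key(pair):
        return tuple(int(part) for part in pair[0].split("."))

    groups = {}
    for number, text in sorted(numbered, key=sort_key):
        major, minor = number.split(".")
        entry = groups.setdefault(major, {"terminal": None, "enabling": []})
        if minor == "0":
            entry["terminal"] = text
        else:
            entry["enabling"].append(text)
    return groups


# Placeholder objectives listed in no particular order.
flat_list = [
    ("1.2", "enabling objective B"),
    ("2.0", "terminal objective 2"),
    ("1.0", "terminal objective 1"),
    ("2.1", "enabling objective C"),
    ("1.1", "enabling objective A"),
]

for major, entry in group_objectives(flat_list).items():
    print(f"{major}.0 {entry['terminal']}")
    for text in entry["enabling"]:
        print(f"    {text}")
```

The output lists each terminal objective followed by its indented enabling objectives, which mirrors the hierarchy that helps learners see how the objectives relate.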

If there are still too many objectives after organizing them, they can be further grouped into essential and extended. Essential objectives delineate the objectives all students must master and the extended objectives, while still important, are secondary. This delineation allows students who have the time and interest to delve deeper while ensuring all students meet the essential goals.

Aligning Objectives

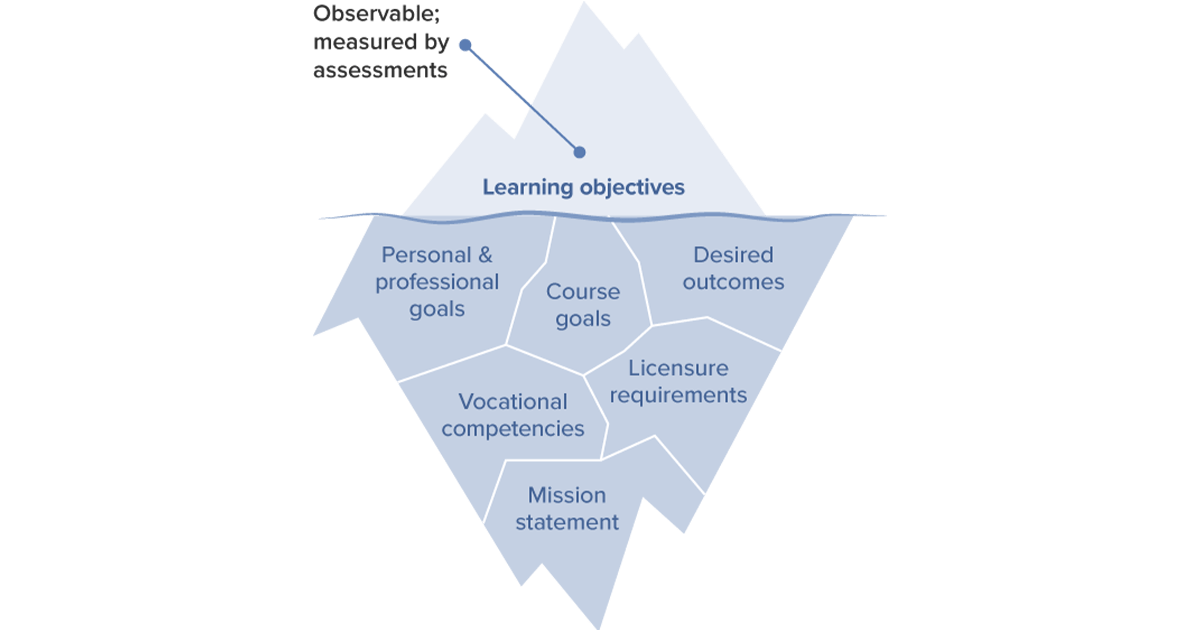

Where do objectives come from? They come from course goals, desired outcomes, personal and professional goals, and professional competencies or licensure requirements. These external goals usually involve broader statements that may focus on what a practitioner should know or what the instruction intends to accomplish. The broader goals and outcomes help define the course goals that are then broken into discrete objectives.

Learning objectives: Like the tip of an iceberg, learning objectives are observable; they are usually supported by many other goals, outcomes, competencies, and requirements.

Objectives are formed from goals, outcomes, and tasks by using an instructional analysis or task analysis. Instructors may use different approaches, but they most commonly break tasks and goals down by looking at the steps taken (performance analysis) or by examining expert thought processes (cognitive analysis) needed to complete the tasks and goals (8). For example, if an outcome states that a student will perform an Arterial Blood Gas (ABG), the task could be broken down into objectives by observing an expert or by having an expert name all the steps necessary to perform the ABG. If the goal is to have students demonstrate clinical reasoning, a tool such as “think aloud” can be used to break down all the steps in the expert’s thought process in order to discover what reasoning steps may be observed. These individual steps in the performance analysis or cognitive analysis make up the objectives needed for the student to become proficient and help align those objectives to the course goals and desired professional outcomes.

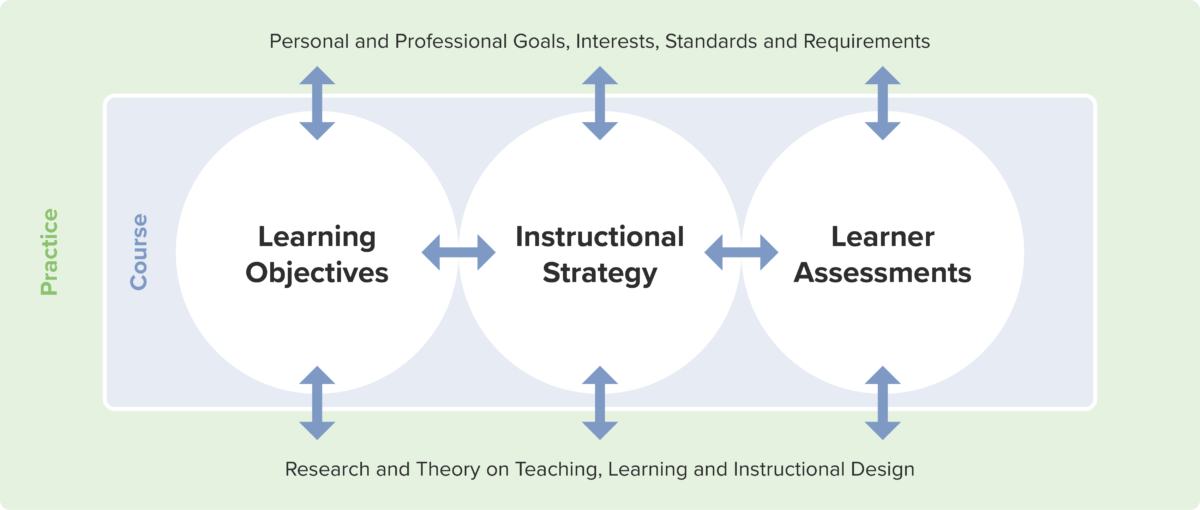

Objectives should be aligned horizontally and vertically as shown in the figure below (9). Horizontal alignment occurs when objectives are aligned within the course and with the content, assessments, and the instructional strategy. Vertical alignment occurs when objectives are aligned with student goals and interests, professional requirements and standards, and research on teaching, learning, and instructional design. Horizontal alignment helps ensure efficiency of instruction, vertical alignment with goals and interests helps increase engagement, and alignment with research and theory helps ensure effectiveness.

Horizontal and vertical alignment, modified from Hirumi (9)

Cognitive, Affective, and Psychomotor Domains

Aligning objectives, instruction, and assessments requires defining what is expected of learners in terms of the cognitive, affective, and psychomotor domains. Much of what is discussed in writing objectives and assessments often relates only to the cognitive domain, but it is equally important in health professions education to develop the affective domain and the psychomotor domain. These domains describe the professional dispositions (values, ethics, and attitudes) and the physical skills needed to be successful in healthcare.

The Domains of Learning: Affective, cognitive, and psychomotor

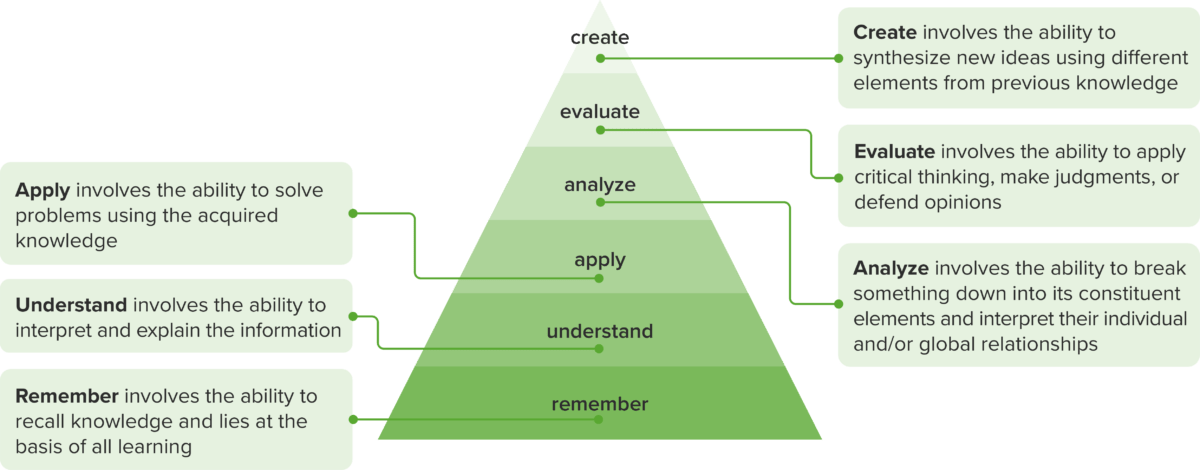

Cognitive Domain: Bloom’s Revised Taxonomy

Bloom’s revised taxonomy for the cognitive domain gives six categories or levels, ranging from foundational cognitive processes such as remembering (e.g. identify or define) to more complex processes such as creating (e.g. design or construct). This hierarchy is helpful for scaffolding instruction and assessment as learners build skills and knowledge.

Bloom’s Revised Taxonomy for the cognitive domain

Affective and Psychomotor Domains

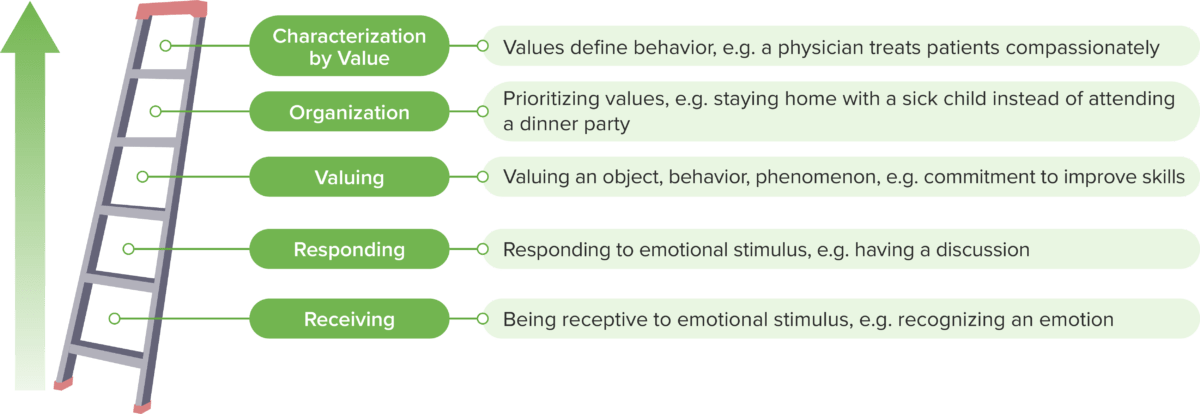

The affective domain includes emotions, values, and attitudes. While these may be harder to observe and quantify compared to cognitive skills, they are vital to developing effective physicians. Like the cognitive domain, they can also be categorized in order of complexity.

Affective domain, modified from Hoque (10)

As they begin learning in the affective domain, learners start by receiving, in which they are receptive to or can recognize a value, emotion, or attitude; this reception or recognition can be identified (as a learning objective), practiced, and assessed. As learners progress in the affective domain, they move toward characterization, which involves the emotion, value, or attitude becoming a part of the learner’s character, such as a healthcare professional taking the time and energy to treat patients and families compassionately. Objectives in the affective domain can be measured by instructor observation, written reflections, or clinical observations.

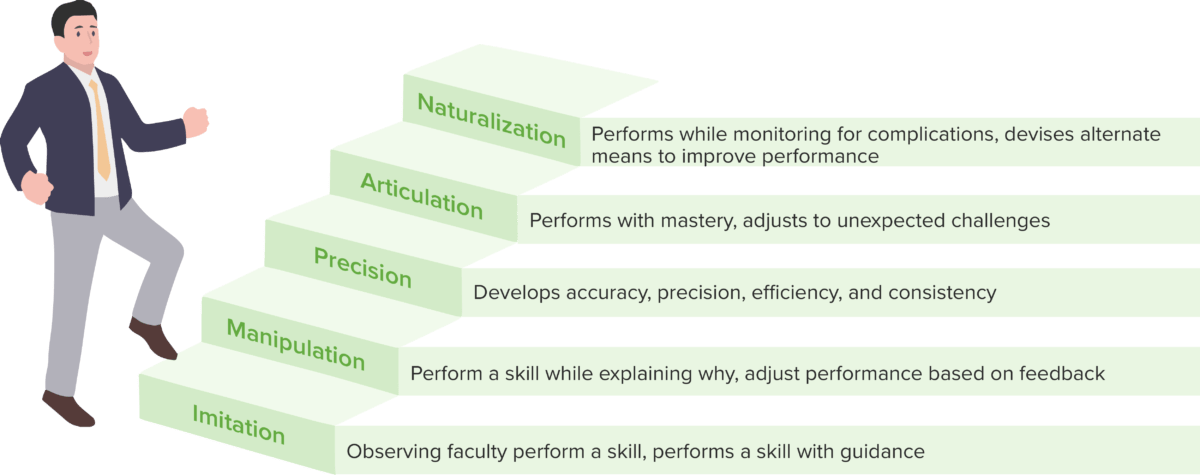

The psychomotor domain entails physical functions that make up the performance of tasks, skills, or actions (11). For example, the physical skills needed to start an intravenous line or perform sutures fall into the psychomotor domain. The Dave framework (12) below can be applied to any physical task required of health professionals as they progress from apprentice to expert. Objectives in the psychomotor domain can often be measured by observation and quality of outcomes as well as metrics such as speed, accuracy, and patient satisfaction.

Psychomotor domain, modified from the Dave framework (12)

Much like the cognitive domain, dispositions and skills in the affective domain and psychomotor domain build from one level to the next. For students to master skills, knowledge, and dispositions at the most complex levels, they must acquire competency at the foundational levels in each domain. Objectives in the affective and psychomotor domains are somewhat similar to cognitive domain objectives in the sense that whether foundational or complex, both the objective and its corresponding assessment should reflect the level of mastery expected.

Assessments

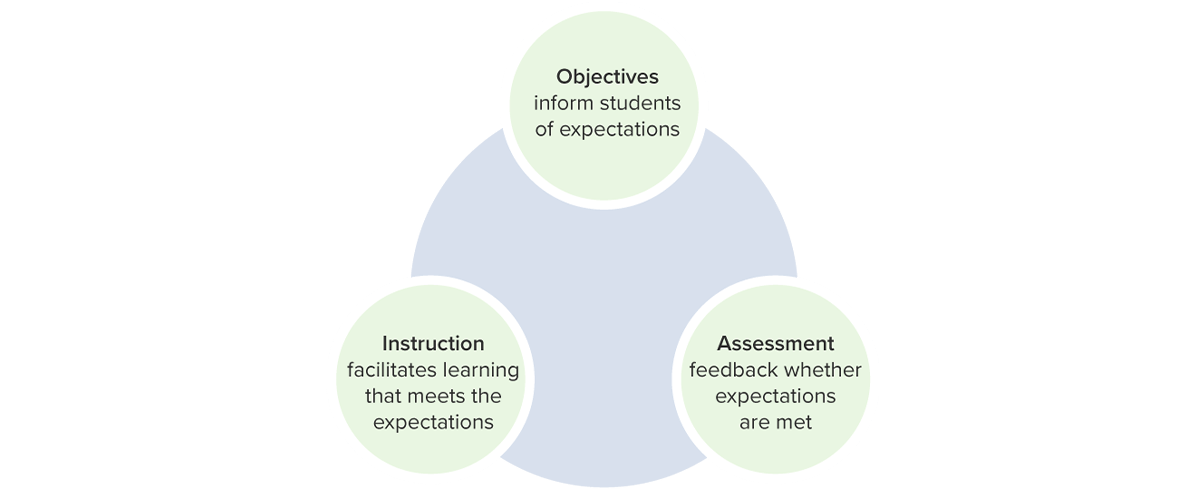

Alignment between assessments, objectives, and instructional strategy, sometimes called the Golden Triangle, is necessary for efficient and effective instruction (1,9). The objectives inform the students what the expectations are, the instruction facilitates learning that meets the expectations, and the assessments provide feedback as to whether those expectations are met. If the assessments, objectives, and instruction don’t align, not only will instruction be ineffective, but students will also likely become frustrated and disengaged. Intentional alignment helps increase student satisfaction as well as student learning.

The Golden Triangle: Alignment of objectives, assessments, and instruction

Levels of assessment

In the cognitive, affective, or psychomotor domains, the levels of assessment need to align with the objectives that they aim to evaluate.

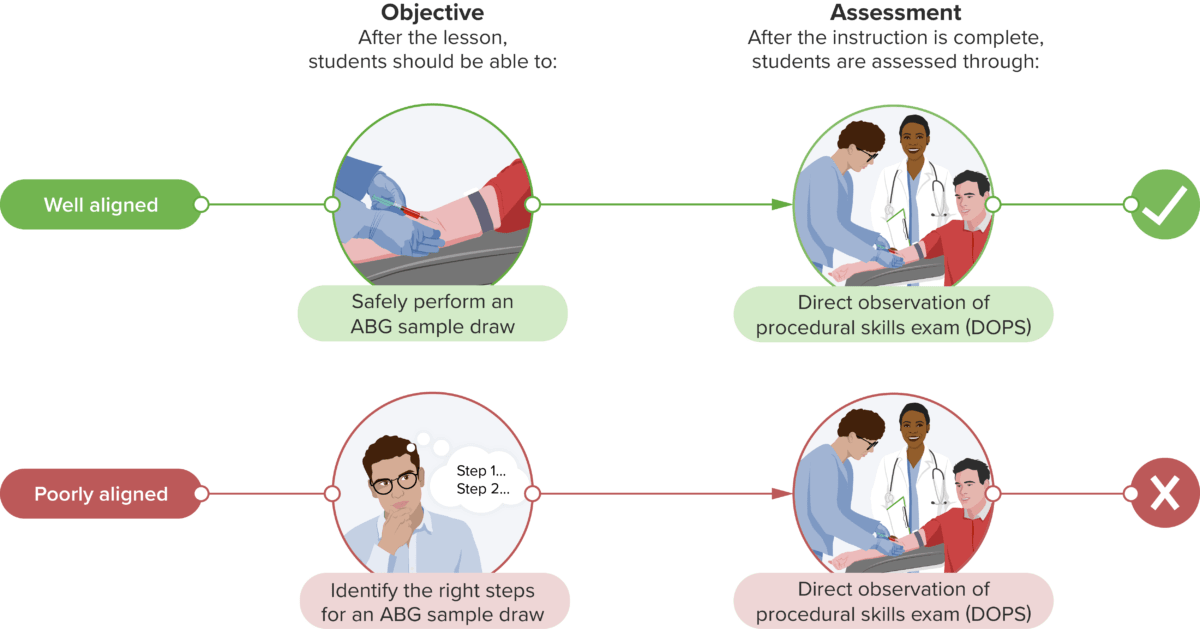

The verbs chosen in creating the objectives must not only define an observable behavior, but they must also align in complexity with the assessment (1,13). For example, if an objective states that “Students will identify signs of respiratory distress in a child under the age of 3” but the assessment demands students create a treatment plan for a child in respiratory distress, the assessment of what the student will do does not match the stated expectations in the objective. The verb in the objective, identify, is a much less complex cognitive skill than the verb in the assessment, create. The student is being assessed on a much more difficult level of complexity than the objective stated as an expectation.

Similarly, if an objective states “Students will name the steps to perform an ABG” but the assessment requires students to perform an ABG independently, a mismatch occurs, not only in level of difficulty but in the position of the objective and assessment within the cognitive domain (“name” as a verb) vs psychomotor domain (“perform” as a verb). If students must perform an ABG, the objective should state “Students will complete an ABG independently” and the instruction should take them through the psychomotor-building steps of imitation, manipulation, and precision.

Alignment of objectives and assessments. Objectives and assessments should be in the same domain of learning and at the same level of complexity.

For students to gain all levels of cognitive processing and for them to perform more complex tasks that medical education needs, the objectives must reflect those expectations and the instruction should prepare students for the assessment of those tasks (13).
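The two mismatches described above, one of level and one of domain, can be checked mechanically once verbs are tagged. The sketch below is a rough illustration rather than an authoritative classification: `VERB_TABLE` and `check_alignment` are hypothetical names, the table covers only the verbs used in the examples above, and the placement of each verb is an assumption that an instructor should adjust to their own taxonomy.

```python
# Levels of Bloom's revised taxonomy, from foundational to complex.
BLOOM_LEVELS = ["remember", "understand", "apply", "analyze", "evaluate", "create"]

# Assumed verb placements for illustration only: (domain, cognitive level).
# Psychomotor verbs carry no cognitive level in this sketch.
VERB_TABLE = {
    "identify": ("cognitive", "remember"),
    "name": ("cognitive", "remember"),
    "create": ("cognitive", "create"),
    "perform": ("psychomotor", None),
}


def check_alignment(objective_verb: str, assessment_verb: str) -> str:
    """Compare the domain and level of an objective verb and an assessment verb."""
    obj_domain, obj_level = VERB_TABLE[objective_verb]
    asm_domain, asm_level = VERB_TABLE[assessment_verb]
    if obj_domain != asm_domain:
        return f"domain mismatch: objective is {obj_domain}, assessment is {asm_domain}"
    if obj_level is not None and asm_level is not None:
        gap = BLOOM_LEVELS.index(asm_level) - BLOOM_LEVELS.index(obj_level)
        if gap > 0:
            return "level mismatch: assessment is more complex than the objective"
        if gap < 0:
            return "level mismatch: assessment is less complex than the objective"
    return "aligned"


# Respiratory distress example: objective says identify, assessment asks to create.
print(check_alignment("identify", "create"))
# ABG example: objective says name, assessment asks to perform.
print(check_alignment("name", "perform"))
# Revised ABG objective: both objective and assessment are performance-based.
print(check_alignment("perform", "perform"))
```

A check like this only catches mismatches in the verbs themselves; the instruction still needs to prepare students for the level at which they will be assessed.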

Types of assessment

The types of assessments should align with the stated objectives as well. Assessments can take the form of written assessments, performance assessments, and portfolio assessments.

Much of medical education relies on a wide base of factual knowledge that requires actions like identify, define, or select. Factual knowledge tends to be in the foundational half of the cognitive domain taxonomy and can be assessed with written assessments such as multiple-choice questions (MCQ), matching, and short answer type questions. Not only can these types of assessment questions cover a wide range of knowledge, they also tend to be easy to grade, have high reliability, and help students prepare for licensure exams. When carefully written, these questions can provide insightful evaluative information.

While MCQs may assess simple recall, context-rich questions can include clinical or lab scenarios which increase authenticity while also assessing problem-solving and clinical reasoning. Such questions, when written carefully, can do more than just assess the knowledge level of cognitive processes; however, context-rich questions are also more difficult to write. When writing questions, instructors should avoid cueing, or allowing students to pick correct answers that they may not have been able to answer without prompting (14). Extended-matching question (EMQ) formats may help minimize issues with cueing by offering more options, such as offering eight or more possible answers compared to the four or five answers that are typical with MCQ (14). To assess higher-order cognitive processes with actions such as create, synthesize, or explain, written responses like essays or discussion posts can also be helpful. While written assessments are effective for objectives in the cognitive domain and may also be helpful in assessing some affective objectives, they are unlikely to be effective for psychomotor objectives. For psychomotor and many affective domain objectives, observations of a student’s performance are standard.

Performance assessments can take the form of simulations (including standardized patients), clinical observations, mini-clinical evaluation exercises (mCEX), or objective structured clinical examinations (OSCEs). Performance assessments allow for assessing clinical competency by observing skills and behaviors in varying levels of authenticity. Performance assessments are suitable for assessing skills, knowledge, and dispositions in all three domains: cognitive, affective, and psychomotor. Since written assessments do not assess the affective or psychomotor domains effectively, given limited time and resources, cognitive knowledge is often assessed with written exams while performance assessments are prioritized for affective and psychomotor skills. While there are numerous types of performance assessments, only a few common ones are described below.

Simulations may be computer-based or use standardized patients. They allow for a controlled setting in which the assessment of skills may be targeted and, if desired, repeated until mastery is achieved (15). Simulations may provide immediate or delayed feedback, depending on the design and fidelity of the scenario, and can help students develop higher-order reasoning skills and/or practice skills.

Clinical observations may be informal or may take specific formats such as short cases, long cases, or 360° observations (16,17). Short cases involve a focused observed examination of a real patient to assess the student’s examination technique and ability to interpret physical signs (16). Long cases usually involve a longer, unobserved examination of a real patient followed by an unstructured oral examination by one or more examiners (14,16,17). 360° evaluations are comprehensive reviews by multiple evaluators that may include members of the clinical team, peers, and self-evaluation (14,17). OSCEs typically involve a series of stations, each focusing on different tasks and often including standardized patients, in which an observer scores students based on their performance (14). mCEX entail observations in a healthcare setting, often by a faculty member, that analyze the student’s competence in medical interviewing, physical examination, humanistic qualities/professionalism, clinical judgment, counseling, and organization/efficiency (18).

Portfolios are gaining popularity for their ability to capture a wide range of the learner’s experiences and to promote a culture of life-long learning, reflective practices, and metacognition (19,20). Portfolios show promise in increasing student knowledge, improving the ability of students to integrate theory and practice, increasing autonomy in learning, and preparing students for post-graduate settings which require reflective practices (19,21). Portfolios show the most promise when they use a flexible student-led format and the learner is guided by a mentor (22).

Assessment Purposes and Feedback

Assessments can serve multiple purposes. Assessments provide motivation and direction for future learning, serve as a mechanism for feedback, and determine advancement and licensure depending on the type of assessment given and the intent for how it will be used (14,17).

Formative assessments are low-stakes evaluations that provide feedback to students about their progress and performance. Any of the assessment types mentioned here can be used as formative feedback if they are intended to show students their progress in reference to benchmarks, provide correction, or promote reflective practices. Short cases, informal clinical observations, and mCEX are often used for formative assessments. When used in a low-stakes or no-stakes setting such as practice tests, assessments such as MCQ provide students with quick knowledge checks and also reinforce learning. Formative assessments that incorporate spacing and interleaving such as U-Behavior, where students were graded on their use of a series of spaced practice quizzes that encouraged interleaving, can also support learning and increase metacognition (23). Formative assessments are ideal for enabling objectives, i.e. objectives that are intended to build skills and knowledge.

Summative assessments are often high-stakes assessments that may determine (in part or whole) the student’s ability to advance in the program or determine licensure (14,17). While summative assessments may provide feedback for future learning, they primarily show to what extent learning has occurred and standards have been met. Summative assessments may reflect all objectives but should reflect terminal objectives at a minimum, i.e. the objectives that define the major skills, knowledge, and dispositions for the period of instruction. Because summative assessments typically have an impact on student progress, care should be taken that measures and instruments used are valid and reliable (14).

Numeric grades are a quick, unambiguous way to provide feedback to students. While short response questions may be easy to grade and their ease of use and wide coverage of material often make them extremely reliable, there is also a movement toward pass/fail to encourage a more holistic approach to determine competency rather than relying on a single score (24). In determining ratings or grades for other types of assessment, educators frequently have concerns about the objectivity and reliability of scoring. The use of checklists, rubrics, and rating scales and the use of multiple evaluators can increase the objectivity and reliability of such assessments (16).

Checklists are useful for competencies that can be broken down into specific skills or behaviors that can be marked as either done or not done (16). These skills and behaviors often align with application level objectives in the cognitive domain (demonstrate, implement, interpret) as well as most observable skills in the affective and psychomotor domains. Checklists are sometimes used in combination with rating scales which ask evaluators to determine the subject’s overall performance (25). While checklists may seem more objective, rating scales have been shown to detect levels of expertise more effectively, especially when administered after evaluator training (25). Checklists are relatively easy to administer, but if used for summative assessments, they can take extensive time and resources to develop since they require the consensus of a panel of experts to determine criteria for essential performance (16).

Like checklists, rubrics also examine competencies that can be broken into specific skills or behaviors; however, rather than determine merely if skills or behaviors are present, rubrics provide a scale to determine what level of expertise has been achieved for each skill or behavior (26). Each behavior or skill is rated from low to high, typically on a 3- or 5-point scale. A low rating indicates either the skill or behavior is nonexistent or unsatisfactory, while a high rating indicates exemplary performance. Rubrics help make the instructors’ grading explicit and more streamlined while also conveying to the student opportunities for improvement, i.e. what is expected for exemplary performance (26).

Practical Use for Medical Educators

Design Evaluation Chart

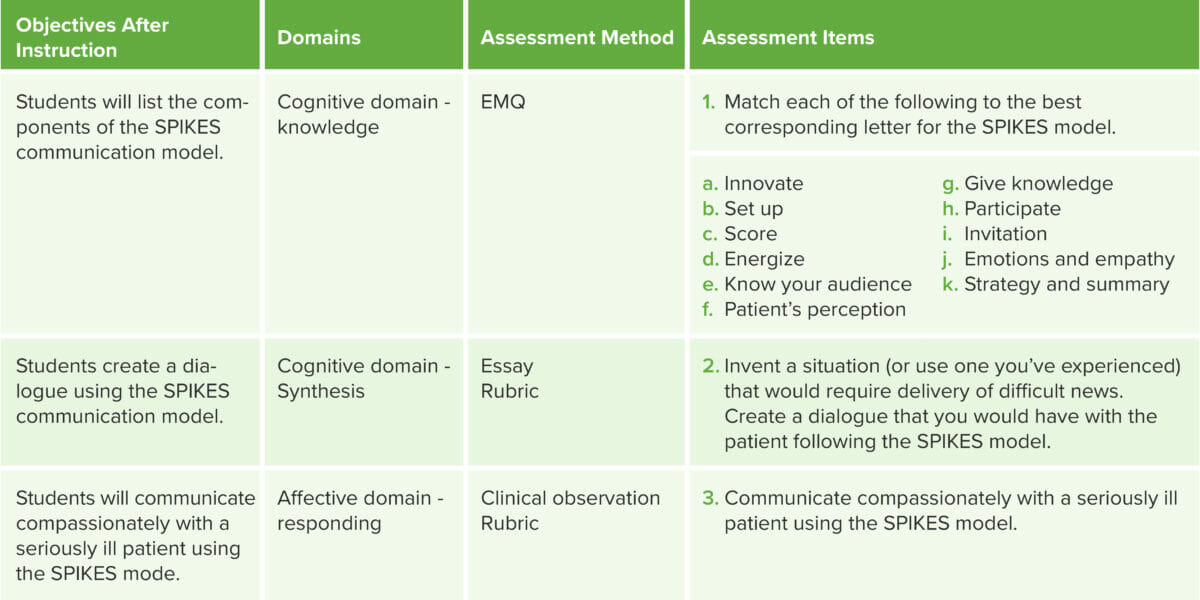

Instructors may find it daunting to consider all of the domains, levels, and frameworks for assessments and objectives while also ensuring vertical and horizontal alignment, objectivity, and validity. One way to help organize all these components to ensure alignment is to make a table, sometimes called a design evaluation chart (3) or learner assessment alignment table (27).

Learner Assessment Alignment Table. Used to align objectives, domains of learning and assessments

Every objective should have at least one assessment item and every assessment item must align with an objective. Including the domain and assessment method in the table helps ensure that the components align among domains and assessment types (e.g. written assessments should generally not be used for objectives in the psychomotor domain). Creating a table such as this gives a quick visual representation of all the elements needed to align for effective and efficient instruction.
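The rules in the paragraph above can also be checked automatically when the alignment table is kept as data. The following is a minimal sketch under stated assumptions: the `audit_alignment` function, the identifiers such as "O1" and "Q1", and the method labels "written" and "performance" are all hypothetical, and the sample rows are placeholders rather than a recommended design.

```python
def audit_alignment(objectives, items):
    """Return a list of alignment problems found in the table.

    objectives: dict mapping objective id -> domain of learning
    items: list of dicts with keys "id", "objective", and "method"
    """
    problems = []
    covered = {item["objective"] for item in items}

    # Every objective should have at least one assessment item.
    for objective_id in objectives:
        if objective_id not in covered:
            problems.append(f"objective {objective_id} has no assessment item")

    for item in items:
        domain = objectives.get(item["objective"])
        # Every assessment item must align with an objective.
        if domain is None:
            problems.append(f"item {item['id']} does not match any objective")
        # Written assessments should generally not be used for psychomotor objectives.
        elif domain == "psychomotor" and item["method"] == "written":
            problems.append(f"item {item['id']} uses a written method for a psychomotor objective")
    return problems


objectives = {"O1": "cognitive", "O2": "psychomotor", "O3": "affective"}
items = [
    {"id": "Q1", "objective": "O1", "method": "written"},
    {"id": "Q2", "objective": "O2", "method": "written"},
    {"id": "Q3", "objective": "O9", "method": "performance"},
]

for problem in audit_alignment(objectives, items):
    print(problem)
```

In this sample, the audit reports that objective O3 is never assessed, that item Q2 pairs a written method with a psychomotor objective, and that item Q3 points to an objective that does not exist.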

Instructor Perspective

- Create well-defined objectives using measurable verbs to define observable behaviors.

- Ensure there are a reasonable number of objectives: not too many, not too few.

- Confirm that objectives align with course goals, professional competencies, national standards, and research on teaching and learning.

- Identify the domain of each objective to help inform assessment.

- Fit the right type of assessment to the objective.

- Determine what kind of feedback you will give to students for each assessment.

- Select a suitable evaluation tool to measure students’ performance on assessments (rubric, checklist…).

Student Perspective

- Don’t overlook the course syllabus: use objectives to guide your studies

- Pay attention to the types of verbs used

- Ask what type of assessment(s) will be used if it isn’t stated

- If checklists or rubrics are provided, use these to help guide your study and practice

Review Questions

1. What do learning objectives describe?

a. Instructional activities that will take place

b. Types of assessment used

c. Observable behaviors that happen as a result of instruction

d. The content that will be used for instruction

2. Which words are desirable to use in a learning objective? [Select all]

a. Know

b. Discuss

c. Evaluate

d. Understand

e. Compare

3. Learning objectives should align with [Select all that apply]

a. Assessments

b. Professional competencies

c. Course goals

d. Licensure requirements

4. Learning objectives in the affective and psychomotor domains are usually best assessed using [Select all that apply]

a. Multiple choice or single response questions

b. Rubrics and checklists

c. Rating scales

d. Short answer questions

Answers: (1) c. (2) b,c,e. (3) a,b,c,d. (4) b,c.

Online Seminar

This online seminar and its accompanying article will focus on learning objectives and assessments, which serve as essential elements to measure learning and ensure that students have grasped and acquired the intended concepts. Educators rely on observable behaviors and demonstrated knowledge as indicators of students’ skills, knowledge, and dispositions, which may include creating a concept map, demonstrating suturing skills, or communicating compassionately with patients. If a student could not successfully perform these objectives before instruction and can perform them after, it is presumed learning has occurred. These desired observable behaviors are defined and communicated in learning objectives, which are short statements of what a learner will be able to do as an outcome of instruction. Likewise, these desired skills and knowledge are measured by assessments, which are aligned with the objectives in order to optimize learning.
