Sampling Distributions for Proportions and Means

-

Slides Statistics pt2 Sampling Distributions for Proportions and Means.pdf

-

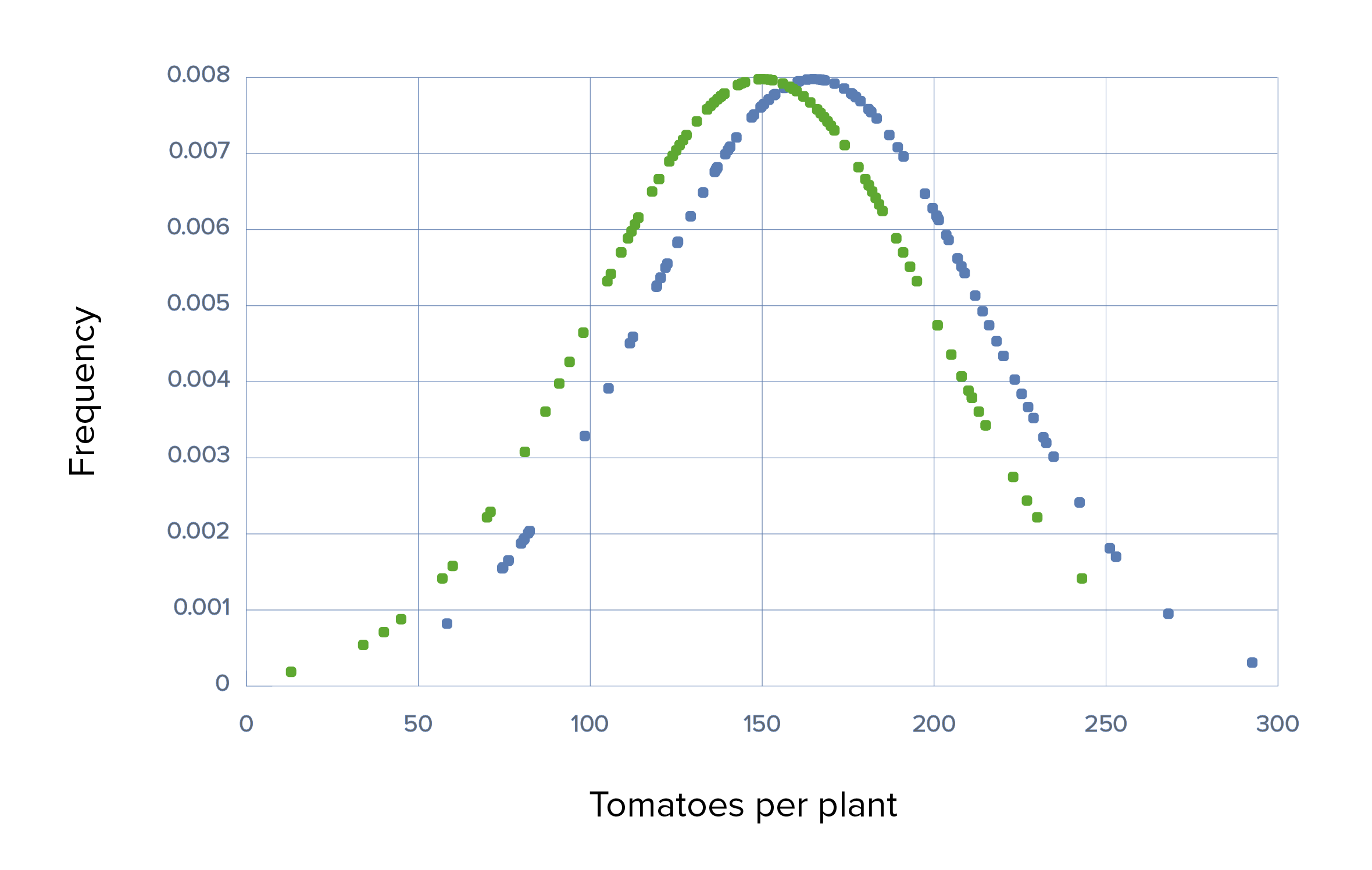

Download Lecture Overview

00:01 Hello, welcome to Statistics 2: 00:04 Statistical Inference and Data Analysis. 00:07 I'm David Spade, and I'll be instructing this course. 00:09 We're going to begin with sampling distributions for proportions and means. 00:13 So what are sampling distributions? Well, let's start with the basics. 00:18 For each sample we draw, we'll calculate some statistic. 00:22 Each of these statistics is itself a random variable and, as such, has a probability distribution. 00:29 This is because the statistic calculated from different samples differs, and we have to be able to model this variability. 00:37 This variation is termed sampling error or sampling variability. 00:41 We'll discuss two types of sampling distributions in this lecture: 00:45 the sampling distribution of a proportion and the sampling distribution of a mean. 00:50 So let's start with the sampling distribution of a proportion. 00:53 Suppose we survey every possible sample of 1007 US adults and ask them whether or not they believe in evolution. 01:01 What can happen? On one survey, 43% might say they believe in evolution, 01:07 while on another we might get 47%, on another 42%, and so forth. 01:13 Each sample is going to produce a different sample proportion. 01:16 So now the question becomes: how can we describe the variation from sample to sample? We can make use of what's called the sampling distribution of the proportion. 01:25 This is designed to model what we would see if we could actually observe the proportions from all possible samples of the same size. 01:32 The sampling distribution shows how the proportion varies from sample to sample. 01:37 We can use the normal model to describe the distribution of a sample proportion. 01:42 How do we do it? Well, in any situation in which a normal model is useful, the key pieces of information are the mean and the standard deviation of the variable being modeled.
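To make sample-to-sample variation concrete, here is a minimal Python sketch that simulates repeated surveys. It is an illustration, not part of the lecture: it assumes a population in which 45% of people would say "yes" (a hypothetical value) and uses the survey size of 1007 mentioned above; the function name `simulate_sample_proportions`, the seed, and the 2000 repetitions are arbitrary choices. Each simulated survey produces its own p hat, and the spread of those values is the sampling variability the lecture describes.

```python
import random
import statistics


def simulate_sample_proportions(p, n, num_samples, seed=0):
    """Simulate num_samples surveys of size n, where each respondent
    independently answers "yes" with probability p, and return the
    sample proportion (p hat) from each survey."""
    rng = random.Random(seed)
    proportions = []
    for _ in range(num_samples):
        # Count "yes" answers among n independent Bernoulli(p) trials.
        successes = sum(1 for _ in range(n) if rng.random() < p)
        proportions.append(successes / n)
    return proportions


# Hypothetical setup: 45% of the population would say "yes",
# and the survey of 1007 adults is repeated 2000 times.
p_hats = simulate_sample_proportions(p=0.45, n=1007, num_samples=2000)

print("smallest p hat:", min(p_hats))
print("largest p hat: ", max(p_hats))
print("mean of p hats:", statistics.mean(p_hats))
print("SD of p hats:  ", statistics.stdev(p_hats))
```

The simulated proportions scatter around the assumed population value of 0.45; describing how tightly they cluster is exactly the job of the sampling distribution of the proportion.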
01:52 For proportions, let p hat denote the sample proportion and let p be the true proportion in the population. 01:58 In other words, p hat is the relative frequency of successes in our sample, which we treat as a group of independent Bernoulli trials, and p is the relative frequency of successes in the population. Let n denote the sample size. 02:13 Then the expected value of the sample proportion is just p, and the standard deviation of the sample proportion is the square root of p times 1 minus p, divided by n: SD(p̂) = √(p(1 − p)/n). 02:23 Knowing this, we can say that as long as the sample size is reasonably large, the sample proportion approximately follows a normal distribution with mean p and standard deviation √(p(1 − p)/n). 02:37 When is it appropriate to use the normal model for the sampling distribution of the proportion? We need three conditions to be satisfied. 02:48 The first is the independence assumption: 02:51 the individuals in the sample must be independent of each other. 02:56 Two, the 10% condition: 02:58 the sample size must be less than 10% of the population size. 03:02 And three, the success/failure condition, which we've seen before. 03:07 Just as in the normal approximation to the binomial distribution, we need np to be at least 10 and n(1 − p) to be at least 10. 03:16 So let's look at an example. 03:18 Suppose we know that 45% of the population believes in evolution. 03:23 We randomly sample 100 people and ask them whether they believe in evolution. 03:27 What is the probability that at least 47% of the respondents in this sample say they believe in evolution? First, we need to ask ourselves: is the normal model appropriate? One, the respondents are randomly sampled, so it seems reasonable to assume independence. 03:45 Two, the 10% condition.
03:47 100 is much less than 10% of the total population. 03:51 And three, the success/failure condition. 03:54 np is 100 times 0.45, or 45, which is bigger than 10, so we're good there. 04:00 n(1 − p) is 100 times 0.55, or 55, which is also bigger than 10, so we're good there too. 04:08 All three conditions are satisfied, so we can use the normal model for the sample proportion. 04:16 So let's continue. 04:18 We need the mean and the standard deviation. 04:21 The expected value of the sample proportion is just the population proportion, 0.45. 04:28 The standard deviation of the sample proportion is the square root of 0.45 times 0.55 divided by 100, or about 0.0497. 04:38 Now let's find the probability of observing a sample proportion of at least 0.47. 04:44 What we're interested in is the probability that p hat is at least 0.47. 04:49 We translate that to a z-score by subtracting off the mean and dividing by the standard deviation. 04:56 So we're looking at the probability that Z is at least (0.47 − 0.45)/0.0497, or the probability that Z is at least 0.4. 05:08 That's the probability that Z is at least 0 minus the probability that Z is between 0 and 0.4. 05:15 So we get 0.5 minus 0.1554, or 0.3446. 05:21 Here's what that looks like. 05:23 This is the normal curve with the area we're interested in shaded in green. 05:29 We see that this probability is not very small. 05:34 Now we can move on to the sampling distribution of a mean and modeling sample averages. 05:39 The idea here is similar to the idea for sample proportions. 05:43 Suppose, for instance, that we gathered 1000 randomly selected apples and weighed them, 05:48 and suppose we get an average weight of 0.35 pounds. 05:52 We gather 1000 more randomly selected apples and get an average weight of 0.31 pounds.
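The evolution example (p = 0.45, n = 100, threshold 0.47) can be reproduced in a few lines. This Python sketch is one possible way to do it, not the lecture's method: the helper names are hypothetical, the population size of 250 million is a hypothetical round stand-in for "the total population", and the normal CDF comes from the standard library's `statistics.NormalDist` instead of a printed z-table. Independence is not checked in code, because it depends on how the sample was collected.

```python
from math import sqrt
from statistics import NormalDist


def proportion_conditions_ok(p, n, population_size):
    """Check the 10% and success/failure conditions for using the
    normal model for p hat. Independence must be judged from the
    sampling design, so it is not checked here."""
    ten_percent = n < 0.10 * population_size
    success_failure = n * p >= 10 and n * (1 - p) >= 10
    return ten_percent and success_failure


def prob_phat_at_least(p, n, threshold):
    """P(p hat >= threshold) under the normal model with
    mean p and standard deviation sqrt(p(1 - p)/n)."""
    sd = sqrt(p * (1 - p) / n)
    z = (threshold - p) / sd
    return 1 - NormalDist().cdf(z)  # standard normal upper tail


# Evolution example; population_size is a hypothetical round figure.
print(proportion_conditions_ok(0.45, 100, population_size=250_000_000))
print(prob_phat_at_least(0.45, 100, 0.47))
```

Because the code does not round z to 0.40 before looking up the tail area, its result agrees with the lecture's 0.3446 to two decimal places rather than exactly.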
05:59 If we took all possible samples of 1000 apples, we would get different average weights. 06:06 This is the sampling variability in the mean, and we need to be able to model it. 06:11 So let's look at the fundamental theorem of statistics, also known as the central limit theorem. 06:16 It states that the sampling distribution of any mean becomes nearly normal as the sample size grows. 06:22 All we need is for the observations to be collected independently and with randomization. 06:27 We don't even need to know the shape of the population distribution: 06:30 as long as the sample size is large enough, it doesn't matter whether the population distribution is symmetric or skewed. 06:36 The sample mean has an approximately normal distribution, and we can use the normal model to describe it. 06:43 So when can we use the central limit theorem? Just as for the sampling distribution of the proportion, certain conditions need to be satisfied before we can use the central limit theorem to find the sampling distribution of the mean. 06:56 First, independence: 06:58 the sampled values must be independent of each other. 07:01 Second, we need a large enough sample. 07:04 In other words, the sample size needs to be reasonably large; a typical threshold is at least 30. 07:11 And again, there is a 10% condition: the sample size must be no larger than 10% of the population size. 07:18 Suppose these conditions are satisfied and the data come from a distribution with mean mu and standard deviation sigma. 07:25 Then if x bar is the sample mean and n is the sample size, x bar follows an approximately normal distribution with mean mu and standard deviation sigma over the square root of n, that is, σ/√n. So let's do an example.
07:40 Suppose we take a random sample of 100 apples from an orchard with over 10,000 apples, where the average weight of an apple is 0.34 pounds with a standard deviation of 0.1 pounds. 07:52 What's the probability that the average weight of our 100 apples is less than 0.32 pounds? Before we do anything, we need to check the conditions. 08:00 Let's start with independence. 08:02 The apples are randomly sampled, so independence seems like a reasonable assumption here. 08:07 Next, the sample size condition. 08:09 Our sample size is 100, which is much larger than 30, so we're okay here. 08:14 And the 10% condition: 100 apples is much less than 10% of the apples in the orchard, 08:20 so we're okay there too. 08:21 All the conditions are satisfied, so we can use the central limit theorem. 08:25 All right, let's do it. 08:27 Let x bar be the average weight of the 100 sampled apples. By the central limit theorem, x bar follows an approximately normal distribution with mean mu = 0.34 pounds and standard deviation sigma over the square root of 100, or 0.1/10 = 0.01 pounds. 08:42 We want the probability that x bar is less than or equal to 0.32, so we translate that to a z-score. 08:50 That gives the probability that Z is less than or equal to (0.32 − 0.34)/0.01, that is, observation minus mean, divided by the standard deviation. 09:00 In other words, it's the probability that Z is less than or equal to −2, where Z is a standard normal, N(0, 1), random variable. 09:07 By symmetry, this equals the probability that Z is at least 2, which is the probability that Z is at least 0 minus the probability that Z is between 0 and 2. So we get 0.5 minus 0.4772, or 0.0228. The following picture illustrates this area under the normal curve. 09:24 As you can see, there's not very much of it. 09:26 What that tells us is that observing an average of 0.32 pounds or less for 100 apples is not very common when the true mean is 0.34 pounds.
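The apple calculation can be checked the same way. In this Python sketch the helper names are hypothetical, the orchard size of 10,000 is the lower bound stated in the example, and `statistics.NormalDist(mu, sd).cdf` stands in for the z-table lookup. The condition check encodes the lecture's rules of thumb (n at least 30, n no larger than 10% of the population); independence again comes from random sampling and is not tested.

```python
from math import sqrt
from statistics import NormalDist


def mean_conditions_ok(n, population_size, min_n=30):
    """Rule-of-thumb checks for applying the CLT to a sample mean:
    n >= min_n and n no larger than 10% of the population."""
    return n >= min_n and n <= 0.10 * population_size


def prob_xbar_at_most(mu, sigma, n, threshold):
    """P(x bar <= threshold) using the CLT approximation
    x bar ~ N(mu, sigma / sqrt(n))."""
    sampling_sd = sigma / sqrt(n)  # standard deviation of the sample mean
    return NormalDist(mu, sampling_sd).cdf(threshold)


# Apple example: mu = 0.34 lb, sigma = 0.1 lb, n = 100, threshold 0.32 lb.
print(mean_conditions_ok(100, population_size=10_000))
print(prob_xbar_at_most(0.34, 0.1, 100, 0.32))
```

Passing the raw threshold to `NormalDist(mu, sampling_sd)` performs the standardization (0.32 − 0.34)/0.01 = −2 internally, so the result matches the lecture's 0.0228 up to rounding.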
09:38 So let's talk a little more about variation. 09:41 Means vary less than individual values. 09:44 This is intuitive: as groups get larger, their averages should be more stable. 09:48 Larger groups are typically more representative of the population as a whole, 09:52 so their averages should stay close to the true population average. 09:56 It's far more likely to get a strange individual observation than a strange average of a thousand observations. 10:04 In other words, it's more likely to get a single apple that weighs less than 0.2 pounds than to get a thousand apples whose average weight is less than 0.2 pounds. 10:15 The central limit theorem reflects this: as the sample size increases, the standard deviation of the sample mean goes down, because, remember, it's sigma divided by the square root of the sample size. 10:26 So as the sample size increases, the standard deviation of the mean gets smaller. 10:31 Let's look at a quick example. 10:33 What if we collected 400 apples from the orchard instead of 100? The standard deviation goes from 0.01 to 0.1 over the square root of 400, or 0.005. 10:44 So the standard deviation got smaller when we took a larger sample. 10:48 This always happens with means. 10:51 What can go wrong with sampling distributions? First, don't confuse the sampling distribution with the distribution of the sample. 10:59 The sampling distribution is the distribution of a statistic computed from many samples, not the distribution of the values within one sample. 11:04 Beware of observations that are not independent. 11:06 The central limit theorem does not apply to observations that are not independent. 11:10 Be careful with small samples, especially from skewed distributions. 11:14 As the sample size increases, the central limit theorem and the normal approximation work well for data from any distribution.
11:22 However, if the population distribution is far from normal and we have only a small sample from it, the normal approximation will perform very poorly. 11:31 So, what have we done? We talked about what a sampling distribution is, and we discussed the sampling distributions of both the sample proportion and the sample mean. 11:42 We did a couple of examples, one of each. 11:45 Then we described the things that can go wrong with the sampling distributions of the proportion and the mean. 11:50 These sampling distributions will be very important throughout the rest of this course, 11:55 so make sure that you're familiar with them and comfortable using them as we move forward. 12:00 This is the end of lecture 1, and I look forward to seeing you back for lecture 2.
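The lecture's points about variation and about small samples from skewed populations can be checked with a short simulation. This Python sketch is an illustration under stated assumptions, not part of the lecture: the population is a hypothetical exponential distribution with mean 1 and standard deviation 1 (chosen only because it is strongly right-skewed), and the sample sizes, seed, and 4000 repetitions are arbitrary. It first reproduces the apple standard deviations (0.01 for n = 100 and 0.005 for n = 400), then compares the spread of simulated sample means with sigma over the square root of n and reports how skewed those means are.

```python
import random
import statistics
from math import sqrt


def standard_error(sigma, n):
    """Standard deviation of the sample mean: sigma / sqrt(n)."""
    return sigma / sqrt(n)


def simulate_means(n, num_samples, rng):
    """Return num_samples sample means, each from n draws of a
    hypothetical exponential population with mean 1 and SD 1."""
    return [statistics.fmean(rng.expovariate(1.0) for _ in range(n))
            for _ in range(num_samples)]


def skewness(values):
    """Sample skewness: the average cubed z-score (population form)."""
    m = statistics.fmean(values)
    s = statistics.pstdev(values)
    return statistics.fmean(((v - m) / s) ** 3 for v in values)


# Apple numbers from the lecture: sigma = 0.1 lb, n = 100 vs n = 400.
print(standard_error(0.1, 100), standard_error(0.1, 400))

rng = random.Random(1)
for n in (2, 30, 200):
    means = simulate_means(n, 4000, rng)
    print(f"n={n:3d}  SD of means={statistics.stdev(means):.4f}  "
          f"sigma/sqrt(n)={standard_error(1.0, n):.4f}  "
          f"skewness={skewness(means):.2f}")
```

For this particular population the theoretical skewness of the mean of n draws is 2/√n (about 1.41 for n = 2 and 0.14 for n = 200), so the n = 2 means stay clearly skewed while the n = 200 means are nearly symmetric, which is the small-sample warning from the lecture expressed in numbers.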

About the Lecture

The lecture Sampling Distributions for Proportions and Means by David Spade, PhD, is from the course Statistics Part 2. It contains the following chapters:

- Sampling distributions for proportions and means

- Using the Normal Model for Proportions

- The Central Limit Theorem

- Illustrating the Normal Curve at Work

Included Quiz Questions

Which statement accurately describes a sampling distribution?

- The sampling distribution refers to the distribution of the statistic calculated from many random samples of the same size.

- The sampling distribution refers to the distribution of the individual values in a random sample.

- The sampling distribution refers to the value of the statistic obtained from one sample.

- The sampling distribution and the distribution of individual values are the same for any sample size.

- The sampling distribution refers to the standard deviation of the statistic calculated from many random samples of different sizes.

Which of the following is not one of the conditions necessary for the normal model to approximate the distribution of a sample proportion?

- The sample size must be at least 10% of the population size.

- The individuals in the sample must be independent of each other.

- We must have an expectation of at least 10 successes.

- We must have an expectation of at least 10 failures.

- We must have at least 10 more successes than failures.

Which statement is not true regarding the central limit theorem?

- The central limit theorem requires that the population distribution is normal.

- The central limit theorem states that the sampling distribution of the sample mean gets closer to the normal distribution as the sample size gets larger.

- The central limit theorem relies on the assumption that our sample is drawn randomly.

- The central limit theorem states that the variation in the sample mean is smaller than the variation in individual values.

- The sample size must be smaller than 10% of the population size.

Consider a random sample of size 100 drawn from a population with a mean of 5 and a standard deviation of 10. What are the mean and the standard deviation of the distribution of the sample mean?

- The mean is 5 and the standard deviation is 1.

- The mean is 5 and the standard deviation is 10.

- The mean is 5 and the standard deviation is 0.1.

- The mean is 0 and the standard deviation is 1.

- The mean is 100 and the standard deviation is 1.

What is true regarding the sampling distributions of means and proportions?

- The use of the normal model as an approximation to the sampling distribution of the mean works well for small samples, provided that the underlying distribution looks fairly close to normal.

- The use of the normal model as an approximation to the sampling distribution of the mean works well for small samples from highly skewed distributions.

- The use of the normal model as an approximation to the sampling distribution of the mean or proportion works well even when the data is not independent.

- The sampling distribution is the same as the distribution of the sample.

- The use of the normal model as an approximation of the sampling distribution of the mean works best for medium-sized samples.

If the population size is 10,000, what is the largest sample size that still meets the 10% condition?

- 1,000

- 10

- 100

- 10,000

- 500

In which case is the success/failure condition met?

- n = 25, p = 0.5

- n = 18, p = 0.5

- n = 8, p = 0.5

- n = 2, p = 0.5

- n = 1, p = 0.5

According to the rule of thumb used with the central limit theorem, what sample size is typically large enough to treat the sampling distribution of the sample mean as approximately normal?

- 30

- 40

- 20

- 15

- 10
